On Adversarial Patches: Real-World Attack on ArcFace-100 Face Recognition System

Abstract

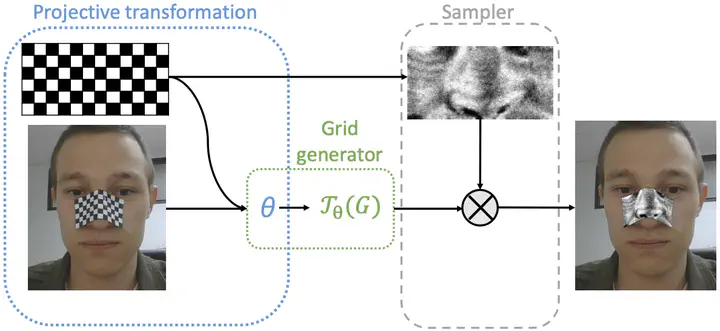

Recent work has shown that image classifiers are vulnerable to adversarial attacks in the digital domain. However, most of these attacks add a small perturbation to an image in order to fool the classifier. Such procedures cannot be used to conduct a real-world attack, where adding an adversarial attribute to the photo is a more practical approach. In this paper, we study the problem of real-world attacks on face recognition systems. We examine the security of one of the best public face recognition systems, LResNet100E-IR with ArcFace loss, and propose a simple method to attack it in the physical world. The method creates an adversarial patch that can be printed, added as a face attribute, and photographed; the photo of a person wearing such an attribute is then passed to the classifier, whose recognized class changes from the correct one to the desired one. The proposed generation procedure allows adversarial patches to be projected not only onto different areas of the face, such as the nose or forehead, but also onto a wearable accessory, such as eyeglasses.
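As a rough illustration of the idea of a targeted patch, the sketch below optimizes only the pixels inside a masked region so that the embedding of the patched image moves toward a target identity's embedding. Everything in it is an assumption made for illustration: the random linear map `W` is a toy stand-in for the LResNet100E-IR network, cosine similarity is an assumed objective, sign-gradient ascent is an assumed optimizer, and the names `optimize_patch`, `mask`, and `target_emb` are hypothetical. It does not model printing or photographing the patch.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def cosine_grad(W, x, t):
    """Gradient of cos(W @ x, t) with respect to x for the toy linear embedding."""
    f = W @ x
    nf, nt = np.linalg.norm(f), np.linalg.norm(t)
    grad_f = t / (nf * nt) - (f @ t) * f / (nf ** 3 * nt)
    return W.T @ grad_f

def apply_patch(image, patch, mask):
    """Replace the masked pixels of the image with the patch pixels."""
    return image * (1.0 - mask) + patch * mask

def optimize_patch(W, image, mask, target_emb, steps=200, step_size=0.01):
    """Sign-gradient ascent on the patch pixels only (assumed optimizer)."""
    patch = np.full_like(image, 0.5)  # start from a uniform gray patch
    best_patch = patch.copy()
    best_sim = cosine(W @ apply_patch(image, patch, mask), target_emb)
    for _ in range(steps):
        x = apply_patch(image, patch, mask)
        grad = cosine_grad(W, x, target_emb) * mask  # zero outside the patch
        patch = np.clip(patch + step_size * np.sign(grad), 0.0, 1.0)
        sim = cosine(W @ apply_patch(image, patch, mask), target_emb)
        if sim > best_sim:  # keep the best patch seen so far
            best_sim, best_patch = sim, patch.copy()
    return best_patch

# Toy data: a flattened 64-pixel "image" and a 16-dimensional embedding.
rng = np.random.default_rng(0)
W = rng.normal(size=(16, 64))
image = rng.uniform(size=64)
mask = np.zeros(64)
mask[:16] = 1.0  # the patch covers the first 16 pixels
target_emb = W @ rng.uniform(size=64)  # embedding of the "desired" identity

gray = np.full_like(image, 0.5)
before = cosine(W @ apply_patch(image, gray, mask), target_emb)
patch = optimize_patch(W, image, mask, target_emb)
patched = apply_patch(image, patch, mask)
after = cosine(W @ patched, target_emb)
print(f"similarity to target: before={before:.3f}, after={after:.3f}")
```

The mask is what distinguishes this from a whole-image perturbation: pixels outside the patch region are never modified, and the patch values are clipped to a valid intensity range rather than being constrained to be small.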