Robustness Threats of Differential Privacy

Abstract

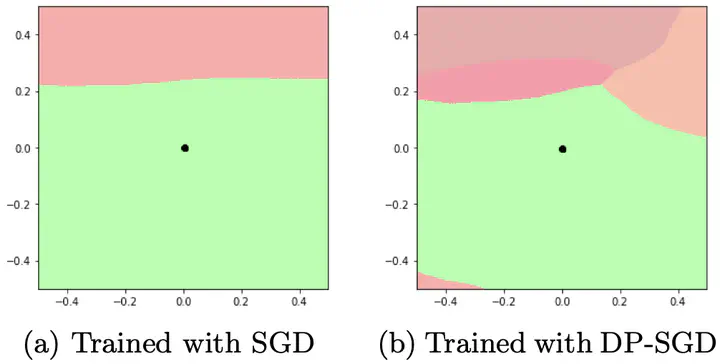

Differential privacy (DP) is a gold-standard framework for measuring and guaranteeing privacy in data analysis. It is well known that adding DP to a deep learning model comes at a cost in accuracy. However, it remains unclear how DP affects the robustness of the model. Standard neural networks are not robust to input perturbations, whether adversarial attacks or common corruptions. In this paper, we empirically observe an interesting trade-off between the privacy and the robustness of neural networks. We experimentally demonstrate that networks trained with DP can, in some settings, be even more vulnerable than their non-private counterparts. To explore this, we extensively study different robustness measurements, including FGSM and PGD adversaries, distance to linear decision boundaries, curvature profile, and performance on a corrupted dataset. Finally, we study how the main ingredients of differentially private neural network training, namely gradient clipping and noise addition, affect (decrease or increase) the robustness of the model.
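For readers unfamiliar with the two ingredients named above, the following is a minimal sketch of how gradient clipping and noise addition are commonly combined in a DP-SGD-style update. It is an illustration under stated assumptions, not the training code used in this paper: the function names `clip_gradient` and `private_gradient` and the parameters `clip_norm` and `noise_multiplier` are hypothetical, and gradients are represented as plain Python lists for simplicity.

```python
import math
import random


def clip_gradient(grad, clip_norm):
    """Rescale one per-example gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    # Gradients already inside the norm ball are left unchanged.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_gradient(per_example_grads, clip_norm, noise_multiplier, rng=None):
    """Clip each per-example gradient, sum them, add Gaussian noise, then average.

    Assumption: the noise standard deviation is noise_multiplier * clip_norm,
    a common convention for DP-SGD; the paper's exact setup may differ.
    """
    rng = rng or random.Random()
    batch_size = len(per_example_grads)
    dim = len(per_example_grads[0])

    # Step 1: gradient clipping bounds each example's influence on the update.
    clipped = [clip_gradient(g, clip_norm) for g in per_example_grads]

    # Step 2: sum the clipped gradients coordinate-wise.
    summed = [sum(g[i] for g in clipped) for i in range(dim)]

    # Step 3: noise addition, with independent Gaussian noise per coordinate.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]

    # Step 4: average over the batch to obtain the update direction.
    return [v / batch_size for v in noisy]
```

Setting `noise_multiplier` to zero isolates the effect of clipping alone, which mirrors the kind of ablation the last sentence of the abstract describes.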