Nonparametric Uncertainty Quantification for Single Deterministic Neural Network

Abstract

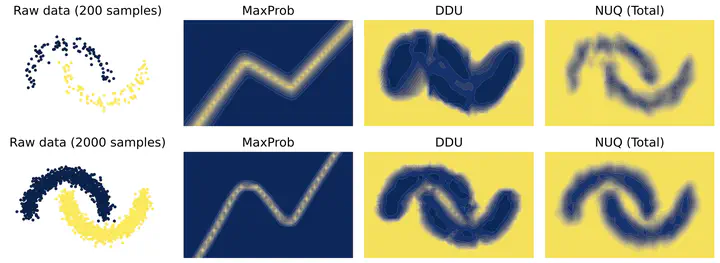

This paper proposes a fast and scalable method for quantifying the uncertainty of machine learning models’ predictions. First, we present a principled way to measure the predictive uncertainty of a classifier, based on the Nadaraya-Watson nonparametric estimate of the conditional label distribution. Importantly, the approach makes it possible to disentangle aleatoric and epistemic uncertainties explicitly. The resulting method operates directly in the feature space; however, it can be applied to any neural network by considering the embedding of the data induced by the network. We demonstrate the strong performance of the method in uncertainty estimation tasks on text classification problems and on a variety of real-world image datasets, such as MNIST, SVHN, CIFAR-100, and several versions of ImageNet.
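To make the central object concrete, the following is a minimal sketch of a Nadaraya-Watson estimate of the conditional label distribution, computed over feature vectors (for example, network embeddings). It is an illustration under stated assumptions, not the paper's implementation: the Gaussian kernel, the fixed `bandwidth`, the function name `nw_label_distribution`, the toy data, and the two uncertainty proxies (one minus the largest estimated class probability as an aleatoric proxy, and low average kernel weight as a sign of high epistemic uncertainty) are all choices made here for exposition.

```python
import numpy as np

def nw_label_distribution(x, feats, labels, n_classes, bandwidth=1.0):
    """Nadaraya-Watson estimate of p(y | x) with a Gaussian kernel (assumed).

    Returns the estimated class probabilities and the average kernel
    weight, which indicates how well the training features cover x.
    """
    sq_dists = np.sum((feats - x) ** 2, axis=1)          # squared distances to x
    weights = np.exp(-0.5 * sq_dists / bandwidth ** 2)   # kernel weights
    mass = weights.sum()
    if mass == 0.0:
        # Every weight underflowed: no local evidence, fall back to uniform.
        probs = np.full(n_classes, 1.0 / n_classes)
    else:
        one_hot = np.eye(n_classes)[labels]               # shape (n, n_classes)
        probs = weights @ one_hot / mass                  # kernel-weighted label frequencies
    return probs, mass / len(feats)

# Hypothetical toy embeddings: two small, well-separated clusters.
feats = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                  [3.0, 3.0], [2.9, 3.0], [3.0, 2.9]])
labels = np.array([0, 0, 0, 1, 1, 1])

for query in ([0.05, 0.05], [1.5, 1.5], [10.0, 10.0]):
    probs, avg_weight = nw_label_distribution(np.array(query), feats, labels, 2)
    aleatoric_proxy = 1.0 - probs.max()   # illustrative proxy, not the paper's definition
    print(query, probs, aleatoric_proxy, avg_weight)
```

In this toy setup, a query inside a cluster gets a confident label estimate and a large average kernel weight; a query midway between the clusters gets an ambiguous label estimate; and a query far from all training features gets a very small average kernel weight, signalling that the estimate rests on little local evidence.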